Python web scraping: crawling job postings from Liepin (獵聘)

2020-10-29 10:00:29


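This is my first time learning Python, and I have only just started with web scraping. This is the first example I finished, so I am writing it down here.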

baseurl.py

```python
# @author centao
# @time 2020.10.24

class findbaseurl:
    def detbaseurl(self):
        # Liepin region codes (the dqs query parameter) for each supported city
        city_codes = {"北京": "010", "上海": "020", "廣州": "050020",
                      "深圳": "050090", "杭州": "070020"}
        occupations = ["資料探勘", "心理學", "java", "web前端工程師"]

        # Ask again until one of the supported job titles is entered
        while True:
            print("Enter the job title to search for: 資料探勘, 心理學, java, web前端工程師")
            occupation = input()
            if occupation in occupations:
                self.occu = occupation
                break
            print("Invalid job title!")

        # Ask again until one of the supported cities is entered
        while True:
            print("Enter a city: 北京, 上海, 廣州, 深圳, 杭州")
            city = input()
            if city in city_codes:
                dqs = city_codes[city]
                self.ci = city
                break
            print("Invalid city")

        # The caller appends the page number after the trailing "curPage="
        url = "https://www.liepin.com/zhaopin/?key=" + occupation + "&dqs=" + dqs + "&curPage="
        return url
```

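This is a simple class: it asks for a job title and a city, then builds the corresponding search URL. It isn't strictly necessary; I wrote it purely to practise defining a Python class.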
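Because `detbaseurl()` reads from `input()`, the URL logic is hard to reuse or test on its own. A minimal non-interactive sketch of the same construction, assuming the `dqs` region codes above are still what Liepin expects (not verified): `build_search_url` and `CITY_CODES` are hypothetical names, and `urllib.parse.urlencode` percent-encodes the Chinese keyword explicitly instead of leaving that to the HTTP library.

```python
from urllib.parse import urlencode

# Region codes (dqs) copied from the findbaseurl class above
CITY_CODES = {"北京": "010", "上海": "020", "廣州": "050020",
              "深圳": "050090", "杭州": "070020"}


def build_search_url(occupation, city, page):
    """Return the Liepin search URL for one result page (hypothetical helper)."""
    if city not in CITY_CODES:
        raise ValueError("unsupported city: " + city)
    # urlencode keeps the key order and percent-encodes non-ASCII keywords
    query = urlencode({"key": occupation, "dqs": CITY_CODES[city], "curPage": page})
    return "https://www.liepin.com/zhaopin/?" + query


print(build_search_url("java", "北京", 1))
# prints https://www.liepin.com/zhaopin/?key=java&dqs=010&curPage=1
```

Raising `ValueError` for an unknown city replaces the retry prompt; an interactive wrapper could catch it and ask again.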

demo.py

```python
# @author centao
# @time 2020.10.24

import random
import time

import requests  # send HTTP requests and fetch page data
from bs4 import BeautifulSoup  # HTML parsing

from baseurl import findbaseurl


def main():
    u = findbaseurl()
    baseurl = u.detbaseurl()
    savepathlink = u.occu + u.ci + "link.txt"
    savepathdetail = u.occu + u.ci + "詳情.txt"
    # 1. Crawl the listing pages and collect the job-detail links
    urllist = getworkurl(baseurl)
    # 2. Save the job-detail links
    savedata(savepathlink, urllist)
    # 3. Crawl every detail page and save its job description
    askURL(urllist, savepathdetail)


# Pool of browser User-Agent strings; one is picked at random per request
user_Agent = [
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.106 Safari/537.36 Edg/80.0.361.54',
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.87 Safari/537.36',
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:72.0) Gecko/20100101 Firefox/72.0',
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:73.0) Gecko/20100101 Firefox/73.0',
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:74.0) Gecko/20100101 Firefox/74.0',
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:75.0) Gecko/20100101 Firefox/75.0',
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:76.0) Gecko/20100101 Firefox/76.0',
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:77.0) Gecko/20100101 Firefox/77.0',
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:78.0) Gecko/20100101 Firefox/78.0',
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:79.0) Gecko/20100101 Firefox/79.0',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_6) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/14.0 Safari/605.1.15',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X x.y; rv:42.0) Gecko/20100101 Firefox/42.0',
    'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/51.0.2704.106 Safari/537.36 OPR/38.0.2220.41',
    'Opera/9.80 (Macintosh; Intel Mac OS X; U; en) Presto/2.2.15 Version/10.00',
    'Mozilla/5.0 (compatible; MSIE 9.0; Windows Phone OS 7.5; Trident/5.0; IEMobile/9.0)',
    'Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:47.0) Gecko/20100101 Firefox/47.0',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/61.0.3163.100 Safari/537.36',
    'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.95 Safari/537.36 OPR/26.0.1656.60',
    'Mozilla/5.0 (Windows NT 5.1; U; en; rv:1.8.1) Gecko/20061208 Firefox/2.0.0 Opera 9.50',
    'Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; en) Opera 9.50',
    'Mozilla/5.0 (Windows NT 6.1; WOW64; rv:34.0) Gecko/20100101 Firefox/34.0',
    'Mozilla/5.0 (X11; U; Linux x86_64; zh-CN; rv:1.9.2.10) Gecko/20100922 Ubuntu/10.10 (maverick) Firefox/3.6.10',
    'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/534.57.2 (KHTML, like Gecko) Version/5.1.7 Safari/534.57.2',
    'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.71 Safari/537.36',
    'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.11 (KHTML, like Gecko) Chrome/23.0.1271.64 Safari/537.11',
    'Mozilla/5.0 (Windows; U; Windows NT 6.1; en-US) AppleWebKit/534.16 (KHTML, like Gecko) Chrome/10.0.648.133 Safari/534.16',
    'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/30.0.1599.101 Safari/537.36',
    'Mozilla/5.0 (Windows NT 6.1; WOW64; Trident/7.0; rv:11.0) like Gecko',
    'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/21.0.1180.71 Safari/537.1 LBBROWSER',
    'Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; WOW64; Trident/5.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; .NET4.0C; .NET4.0E; LBBROWSER)',
    'Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; WOW64; Trident/5.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; .NET4.0C; .NET4.0E; QQBrowser/7.0.3698.400)',
    'Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; QQDownload 732; .NET4.0C; .NET4.0E)',
    'Mozilla/5.0 (Windows NT 5.1) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.84 Safari/535.11 SE 2.X MetaSr 1.0',
    'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; Trident/4.0; SV1; QQDownload 732; .NET4.0C; .NET4.0E; SE 2.X MetaSr 1.0)']

# Note: requests looks proxies up by lowercase URL scheme ("http" / "https"),
# so these "HTTP" keys never match and the requests are sent directly.
proxy_list = [{'HTTP': 'http://61.135.185.156:80'},
              {'HTTP': 'http://61.135.185.111:80'},
              {'HTTP': 'http://61.135.185.112:80'},
              {'HTTP': 'http://61.135.185.160:80'},
              {'HTTP': 'http://183.232.231.239:80'},
              {'HTTP': 'http://202.108.22.5:80'},
              {'HTTP': 'http://180.97.33.94:80'},
              {'HTTP': 'http://182.61.62.74:80'},
              {'HTTP': 'http://182.61.62.23:80'},
              {'HTTP': 'http://183.232.231.133:80'},
              {'HTTP': 'http://183.232.231.239:80'},
              {'HTTP': 'http://220.181.111.37:80'},
              {'HTTP': 'http://183.232.231.76:80'},
              {'HTTP': 'http://202.108.23.174:80'},
              {'HTTP': 'http://183.232.232.69:80'},
              {'HTTP': 'http://180.97.33.249:80'},
              {'HTTP': 'http://180.97.33.93:80'},
              {'HTTP': 'http://180.97.34.35:80'},
              {'HTTP': 'http://180.97.33.249:80'},
              {'HTTP': 'http://180.97.33.92:80'},
              {'HTTP': 'http://180.97.33.78:80'}]


# Fetch the page at the given URL
def askmainURL(url):
    # Send a browser User-Agent header so the request looks like a normal browser
    head = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/85.0.4183.83 Safari/537.36"}
    html = requests.get(url=url, headers=head, timeout=(3, 7))
    return html


# Collect the job-detail links from listing pages 1 to 4
def getworkurl(baseurl):
    urllist = []
    for a in range(1, 5):
        url = baseurl + str(a)
        html = askmainURL(url)
        soup = BeautifulSoup(html.text, "html.parser")
        # Job titles are <h3> tags with a title attribute that wrap the detail link
        for h3 in soup.find_all("h3"):
            if not h3.has_attr("title"):
                continue
            link = h3.find("a")
            if link is None or not link.has_attr("href"):
                continue
            href = link["href"]
            # Relative links are made absolute against the site root
            if not href.startswith("https"):
                href = "https://www.liepin.com" + href
            urllist.append(href)
    return urllist


# -------------------------Crawl the detail pages-------------------------------

# Read back the saved links, one per line
def readlink(savepathlink):
    file = open(savepathlink, mode='r')
    contents = file.readlines()
    file.close()
    return contents


# Fetch one detail page with a random User-Agent (and, nominally, a random proxy);
# returns None if the request fails
def askURLsingle(url):
    heads = {"User-Agent": random.choice(user_Agent)}
    try:
        html = requests.get(url=url, headers=heads, proxies=random.choice(proxy_list), timeout=(3, 7))
        html.encoding = 'utf-8'
        return html
    except requests.exceptions.RequestException as e:
        print(e)
        return None


# Fetch the job description from every detail page and append it to savepath
def askURL(urllist, savepath):
    for link in urllist:
        print(link)
        heads = {"User-Agent": random.choice(user_Agent)}
        try:
            html = requests.get(url=link, headers=heads, timeout=(3, 7))
            html.encoding = 'utf-8'
            soup = BeautifulSoup(html.text, "html.parser")
            item = soup.find_all("div", class_="content content-word")
            time.sleep(1)  # throttle: at most about one request per second
        except requests.exceptions.RequestException as e:
            print(e)
            continue
        # If the description block is missing, retry up to 10 times
        attempts = 0
        while len(item) == 0 and attempts < 10:
            h = askURLsingle(link)
            attempts += 1
            if h is None:
                continue
            soup = BeautifulSoup(h.text, "html.parser")
            item = soup.find_all("div", class_="content content-word")
        if len(item) != 0:
            savedetail(savepath, item[0].text.strip())


# Append one job description to the detail file
def savedetail(savepath, data):
    file = open(savepath, mode='a', errors='ignore')
    file.write(data + '\n')
    file.close()


# Append the collected links to the link file, one per line
def savedata(savepath, urllist):
    file = open(savepath, mode='a')
    for item in urllist:
        file.write(item + '\n')
    file.close()


if __name__ == '__main__':
    main()
```

demo.py contains the code that crawls the job-detail links and then the job-detail pages themselves.
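The link prefixing in `getworkurl` and the retry loop in `askURL` can be factored into small helpers. A sketch under stated assumptions: `normalize_link`, `fetch_with_retries` and `SITE_ROOT` are hypothetical names; the standard-library `urljoin` replaces the manual "https" check and also copes with protocol-relative links such as `//host/path`; the doubling wait between attempts is an addition the original does not have. `fetch` is injected so the helper can be tried without network access.

```python
import random
import time
from urllib.parse import urljoin

SITE_ROOT = "https://www.liepin.com"


def normalize_link(href):
    """Make a detail link absolute; absolute links come back unchanged."""
    return urljoin(SITE_ROOT + "/", href)


def fetch_with_retries(fetch, url, user_agents, attempts=10, delay=1.0):
    """Call fetch(url, headers) until it returns something other than None.

    A fresh random User-Agent is used for every attempt, and the wait
    between attempts doubles after each failure (exponential backoff).
    """
    wait = delay
    for attempt in range(attempts):
        headers = {"User-Agent": random.choice(user_agents)}
        result = fetch(url, headers)
        if result is not None:
            return result
        if attempt < attempts - 1:  # no pointless sleep after the last try
            time.sleep(wait)
            wait *= 2
    return None
```

In demo.py, `fetch` could wrap `requests.get(url, headers=headers, timeout=(3, 7))` and return `None` on `requests.exceptions.RequestException` or when the `content content-word` div is missing.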

createdict.py

```python
# coding:utf-8
# @author centao
# @time 2020.10.24

import re

import jieba
import jieba.analyse as analyse


def main():
    print("Enter the job title:")
    a = input()
    create(a)


def create(a):
    # Merge the detail files scraped for all five cities
    lines = (read(a + '上海詳情.txt') + read(a + '北京詳情.txt') + read(a + '深圳詳情.txt')
             + read(a + '廣州詳情.txt') + read(a + '杭州詳情.txt'))
    text = "".join(lines)
    # Data cleaning: remove list numbering ("1.", "2、", "a)" ...), stray numbers and semicolons
    text = re.sub(r'([0-9 a-z]+[.、,，)）])|( [0-9]+ )|[;；]', '', text)
    # Replace punctuation with spaces
    text = re.sub(r'[,，、。【】()（）/]', ' ', text)
    # Generic recruiting words that would otherwise dominate the keyword list
    stopword = {'熟悉', '需要', '崗位職責', '職責描述', '工作職責', '任職', '優先', '專案', '團隊', '產品', '相關', '業務', '要求', '檔案', '工作', '能力',
                '優化', '需求', '並行', '經驗', '完成', '具備', '職責', '具有', '應用', '平臺', '參與', '編寫', '瞭解', '調優', '使用', '服務', '程式碼', '效能', '快取',
                '中介軟體', '解決', '海量', '場景', '技術', '使用者', '進行', '負責', '領域', '系統', '構建', '招聘', '專業', '課程', '公司', '員工', '人才', '學習', '組織', '崗位',
                '薪酬', '運營', '制定', '體系', '發展', '完善', '提供', '學員', '學生', '流程', '定期', '行業', '描述', '策劃', '內容', '協助', '方案'}
    keyword = jieba.lcut(text, cut_all=False)  # precise-mode word segmentation
    out = ' '  # cleaned text: the remaining tokens separated by spaces
    for word in keyword:
        if word not in stopword and word != '\t':
            out += word + " "
    # TF-IDF analysis: keep the 100 highest-weighted nouns (n), verbal nouns (vn) and verbs (v)
    tf_idf = analyse.extract_tags(out, topK=100, withWeight=False, allowPOS=("n", "vn", "v"))
    print(tf_idf)
    savedata(a + '字典.txt', tf_idf)


def read(savepathlink):
    file = open(savepathlink, mode='r')
    contents = file.readlines()
    file.close()
    return contents


# Append the keywords to the dictionary file, one per line
def savedata(savepath, urllist):
    file = open(savepath, mode='a')
    for item in urllist:
        file.write(item + '\n')
    file.close()


if __name__ == '__main__':
    main()
```

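This is the code that builds the keyword dictionary. It uses jieba for word segmentation and TF-IDF analysis to pick the keywords, in preparation for cleaning the data.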
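`jieba.analyse.extract_tags` does the TF-IDF weighting internally, using IDF values from a corpus bundled with jieba rather than from your own files. To make the idea concrete, here is a small pure-Python sketch that scores the words of one tokenised document against a handful of others, with tf = count / document length and idf = log(N / df). `tf_idf_top_k` is a hypothetical helper; tokenisation (`jieba.lcut` in the original) is assumed to have happened already, and no part-of-speech filtering is done.

```python
import math
from collections import Counter


def tf_idf_top_k(docs, target, stopwords=(), k=10):
    """Return the k highest-scoring words of docs[target] by TF-IDF.

    docs is a list of tokenised documents (each a list of words).
    """
    stop = set(stopwords)
    # Drop stopwords and whitespace-only tokens from every document
    cleaned = [[w for w in doc if w not in stop and w.strip()] for doc in docs]
    n_docs = len(cleaned)
    # Document frequency: in how many documents each word appears
    df = Counter()
    for doc in cleaned:
        df.update(set(doc))
    counts = Counter(cleaned[target])
    length = len(cleaned[target])
    if length == 0:
        return []
    scores = {w: (c / length) * math.log(n_docs / df[w]) for w, c in counts.items()}
    # Highest score first; ties broken alphabetically for a stable order
    ranked = sorted(scores, key=lambda w: (-scores[w], w))
    return ranked[:k]


docs = [["java", "spring", "java", "mysql"], ["java", "python"], ["spring", "web"]]
print(tf_idf_top_k(docs, 0, k=2))  # prints ['mysql', 'java']
```

"mysql" outranks the more frequent "java" because it appears in only one of the three documents, which is exactly the effect that lets TF-IDF push generic words down the list.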

fenci_ciyun.py

```python
# coding:utf-8
# @author centao
# @time 2020.10.24

import re

import jieba
import matplotlib.pyplot as plt
import numpy as np
from PIL import Image
from wordcloud import WordCloud


def main():
    print("Enter the job title:")
    occu = input()
    picture(occu)


def read(savepathlink):
    file = open(savepathlink, mode='r', errors='ignore')
    contents = file.readlines()
    file.close()
    return contents


def picture(occu):
    # Data loading: merge the detail files scraped for all five cities
    lines = (read(occu + '上海詳情.txt') + read(occu + '北京詳情.txt') + read(occu + '深圳詳情.txt')
             + read(occu + '廣州詳情.txt') + read(occu + '杭州詳情.txt'))
    text = "".join(lines)
    # Data cleaning: remove list numbering, stray numbers and semicolons
    text = re.sub(r'([0-9 a-z]+[.、,，)）])|( [0-9]+ )|[;；]', '', text)
    # Replace punctuation with spaces
    text = re.sub(r'[,，、。【】()（）/]', ' ', text)
    keyword = jieba.lcut(text, cut_all=False)
    # Keep only the words that appear in the dictionary built by createdict.py
    vocab = {line.rstrip('\n') for line in read(occu + "字典.txt")}
    out = ''
    for word in keyword:
        if word in vocab:
            out += word + " "

    mask = np.array(Image.open('2.jpg'))  # image that defines the cloud's shape
    font = r'C:\Windows\Fonts\simkai.ttf'  # a font with Chinese glyphs (Windows path)
    wc = WordCloud(
        font_path=font,  # font file used to draw the words
        margin=2,
        mask=mask,  # background shape; when a mask is given, width and height are ignored
        background_color='white',  # background colour
        max_font_size=300,
        width=5000,
        height=5000,
        max_words=200,
        scale=3,
    )
    wc.generate(out)  # build the word cloud
    wc.to_file(occu + '詞雲.jpg')  # save it to a local file
    # Display the image
    plt.imshow(wc, interpolation='bilinear')
    plt.axis('off')
    plt.show()


if __name__ == '__main__':
    main()
```

This is the final step: it builds the word-cloud image from the detail .txt files and the dictionary created above.
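The filtering loop in `picture()` keeps a token only if it appears in the `字典.txt` dictionary. A minimal sketch of the same step that also counts the surviving words, assuming the tokens have already been produced by `jieba.lcut`: `dictionary_frequencies` is a hypothetical helper, and a set is used so each lookup is constant time instead of a scan over the whole list of lines.

```python
from collections import Counter


def dictionary_frequencies(tokens, dictionary_lines):
    """Count only the tokens that appear in the keyword dictionary.

    dictionary_lines are lines as returned by readlines(), one word per line.
    """
    vocab = {line.rstrip("\n") for line in dictionary_lines if line.strip()}
    return Counter(t for t in tokens if t in vocab)


freq = dictionary_frequencies(["java", "開發", "java", "的"], ["java\n", "開發\n"])
print(dict(freq))  # prints {'java': 2, '開發': 1}
```

The resulting counts could be passed to `WordCloud.generate_from_frequencies(freq)` instead of joining the words into one string and calling `generate(out)`; that skips wordcloud's own text processing (regex tokenising, its default English stopword list and collocation detection), which is not designed for Chinese text.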

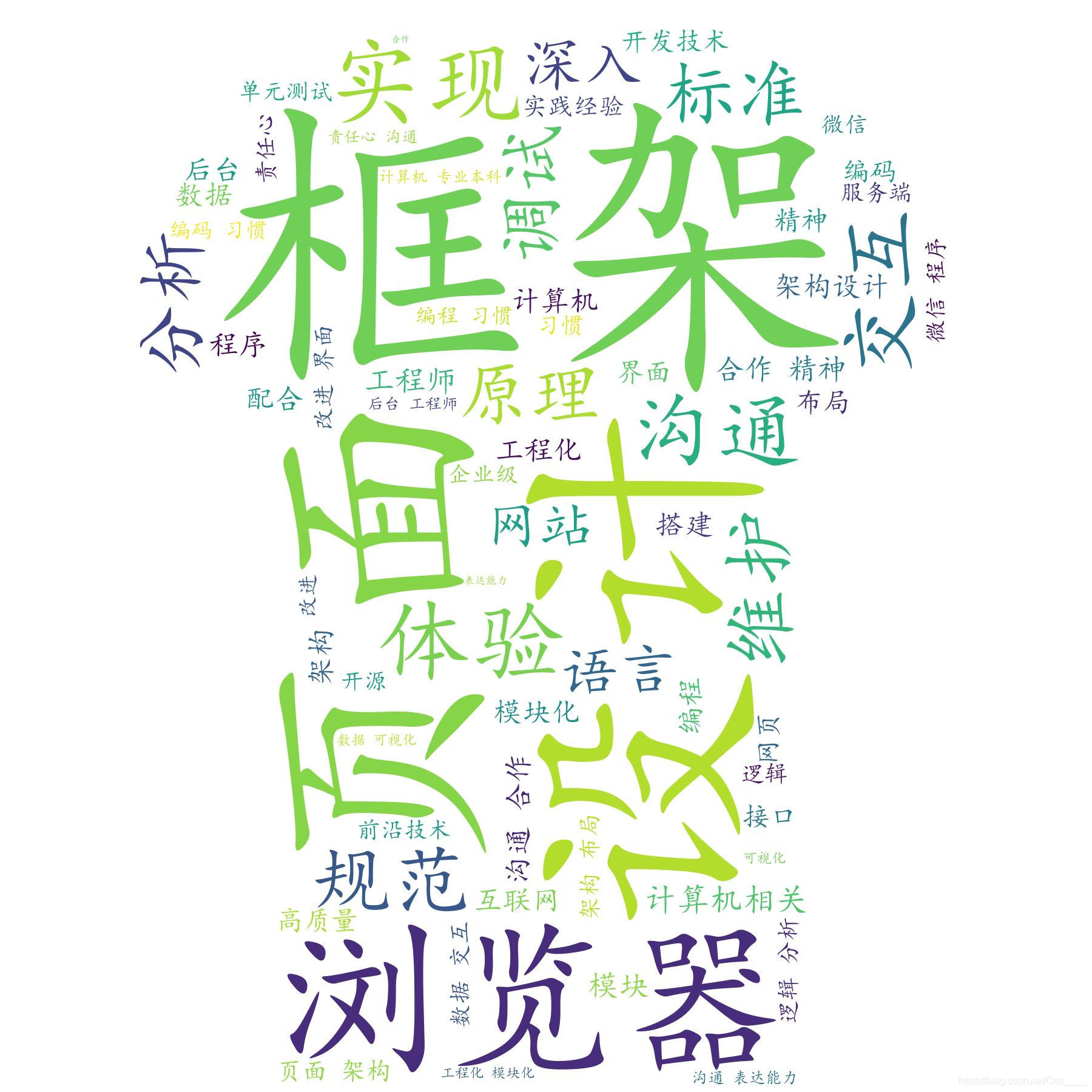

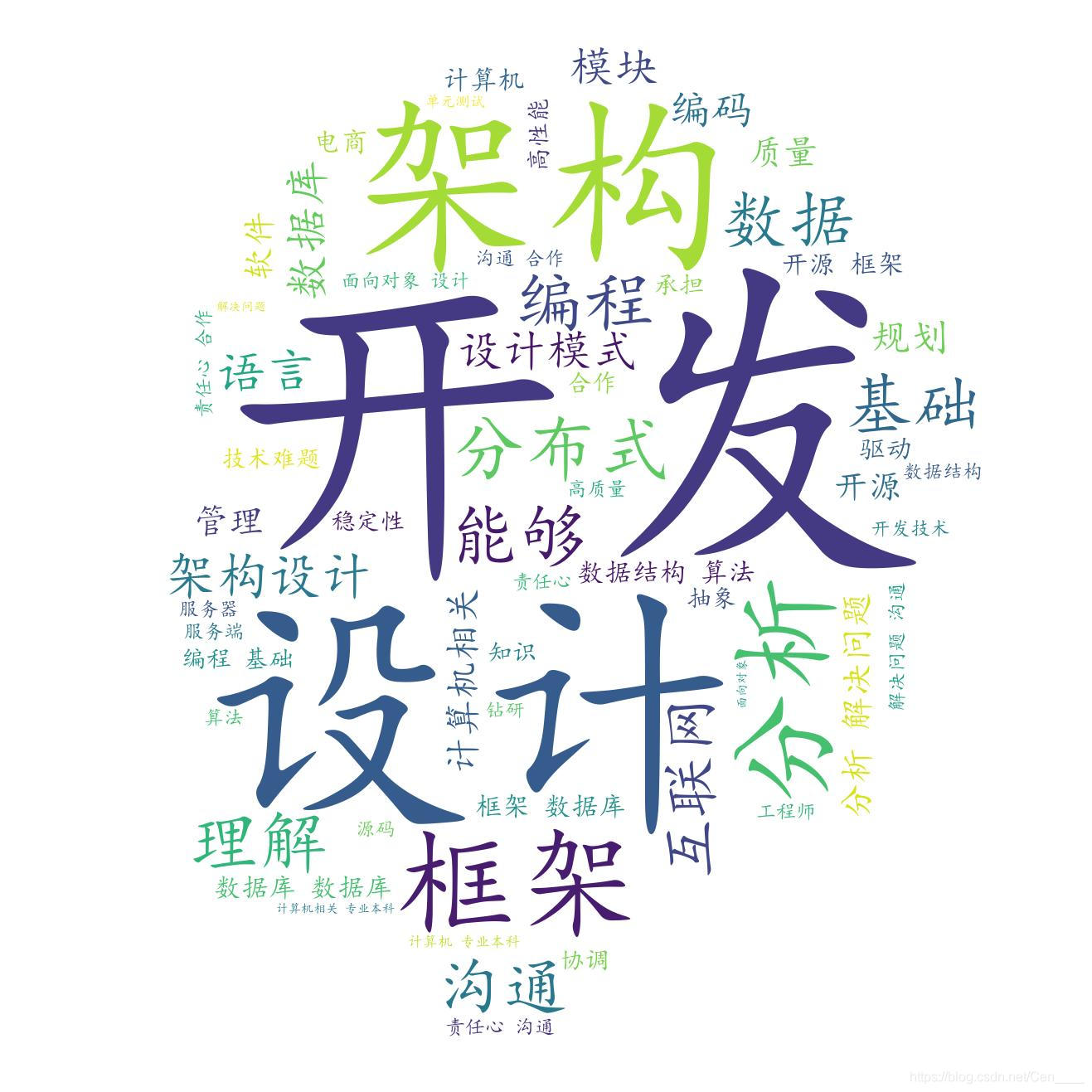

The images above show the word clouds for web front-end engineer (web前端工程師) and java.